Cambridge University researchers reveal why people believe malicious, fake security messages and ignore real warnings.

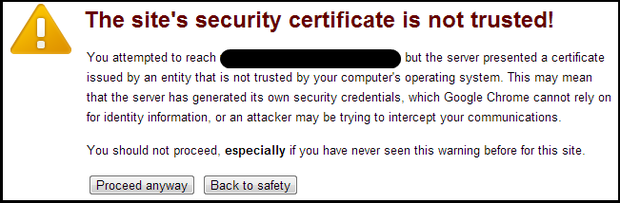

How do you react to the following warning when it pops up on your screen?

I have yet to find a person who always obeys the above warning, but the warning below has proven very effective, even though it’s a complete fake. Why?

This is the question two University of Cambridge researchers try to answer in their paper, "Reading This May Harm Your Computer: The Psychology of Malware Warnings." Professor David Modic and Professor Ross Anderson, authors of the paper, took a long, hard look at why computer security warnings are ineffective.

Warning message overload

The professors cite several earlier studies which provide evidence that users are choosing to ignore security warnings. I wrote about one of the cited studies authored by Cormac Herley, where he argues:

- The sheer volume of security advice is overwhelming.

- The typical user does not always see the benefit from heeding security advice.

- The benefit of heeding security advice is speculative.

The Cambridge researchers agree with Herley, writing in a blog post:

“We’re constantly bombarded with warnings designed to cover someone else’s back, but what sort of text should we put in a warning if we actually want the user to pay attention to it?”

I can’t think of a better example of what Herley, Anderson, and Modic were referring to than my first example: the “site’s security certificate is not trusted” warning.

Warning messages are persuasive

Anderson and Modic also looked at prior research on persuasion psychology, searching for factors that influence decision-making. They came up with the following determinants:

- Influence of authority: Warnings are more effective when potential victims believe that they come from a trusted source.

- Social influence: Individuals will comply if they believe that other members of their community also comply.

- Risk preferences: People in general tend to act irrationally under risky conditions.

Use what works for the bad guys

In order to find out what users will pay attention to, Anderson and Modic created a survey with warnings that played on different emotions, hoping to see which warnings would have an impact. In an ironic twist, the researchers employed the same psychological factors already proven to work by the bad guys:

“[W]e based our warnings on some of the social psychological factors that have been shown to be effective when used by scammers. The factors which play a role in increasing potential victims’ compliance with fraudulent requests also prove effective in warnings.”

The warnings used in the survey were broken down into the following types:

- Control Group: Anti-malware warnings that are currently used in Google Chrome.

- Authority: The site you were about to visit has been reported and confirmed by our security team to include malware.

- Social Influence: The site you were about to visit includes software that can damage your computer. The scammers operating this site have been known to operate on individuals from your local area. Some of your friends might have already been scammed. Please, do not continue to this site.

- Concrete Threat: The site you are about to visit has been confirmed to include software that poses a significant risk to you. It will try to infect your computer with malware designed to steal your bank account and credit card details in order to defraud you.

- Vague Threat: We have blocked your access to this page. It is possible the page contains software that may harm your computer. Please close this tab and continue elsewhere.

The research team then enlisted 500 men and women through Amazon Mechanical Turk to participate in the survey, recording how much influence each warning type had on participants.

People respond to clear, authoritative messages

Anderson and Modic expressed surprise that social cues did not have the impact they expected. The warnings that worked best were specific and concrete, such as messages declaring that the computer will become infected by malware, or that a certain malicious website will steal the user’s financial information. Anderson and Modic suggest that software developers who create warnings heed the following advice:

- Warning text should include a clear and non-technical description of the possible negative outcome.

- The warning should be an informed direct message given from a position of authority.

- The use of coercion (as opposed to persuasion) should be minimized, as it is likely to be counterproductive.
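For developers, here is a minimal sketch of how that advice might translate into code. It is my own illustration, not something taken from the paper: the `SiteWarning` class, its field names, and the `render_warning` function are all hypothetical, and the example wording is adapted from the Authority and Concrete Threat warnings used in the survey.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteWarning:
    """Hypothetical container for the three parts of a warning."""
    source: str   # who is issuing the warning (the position of authority)
    outcome: str  # concrete, non-technical description of what could go wrong
    advice: str   # recommended action, phrased as guidance rather than a threat


def render_warning(w: SiteWarning) -> str:
    """Compose the message: authority first, then the concrete risk, then advice."""
    for name, value in (("source", w.source), ("outcome", w.outcome), ("advice", w.advice)):
        if not value.strip():
            # Refuse to produce a vague warning with a missing part.
            raise ValueError(f"{name} must not be empty")
    return f"{w.source} has confirmed that this site {w.outcome}. {w.advice}"


example = SiteWarning(
    source="Our security team",
    outcome="will try to install software that steals your bank account and credit card details",
    advice="We recommend closing this tab.",
)
print(render_warning(example))
```

Requiring all three fields is a deliberate choice in this sketch: a warning that cannot name its source or the concrete outcome is exactly the vague kind the researchers found less effective.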

The bottom line, according to Anderson and Modic: “Warnings must be fewer, but better.” And from what I read in the report, the bad guys are doing a superior job when it comes to warnings, albeit for a different reason.

Via: techrepublic
