Facebook says it’s not using its new Nearby Friends feature to target ads yet, but after I asked why it’s tracking “Location History” it admitted it will eventually use the data for marketing purposes.

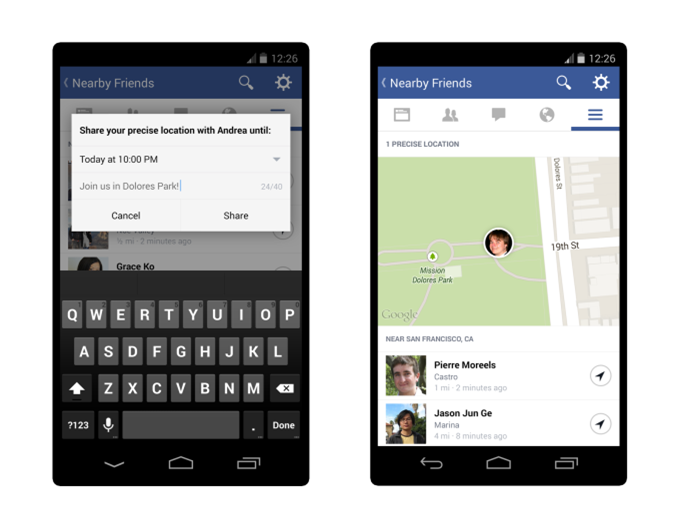

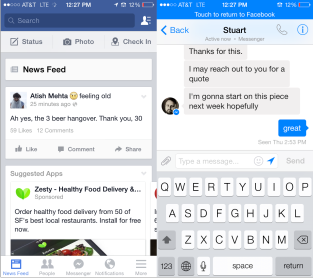

This morning, the proximity sharing feature began rolling out to iOS users after its launch, and with it I discovered a new “Location History” setting that must be left on to use Nearby Friends.

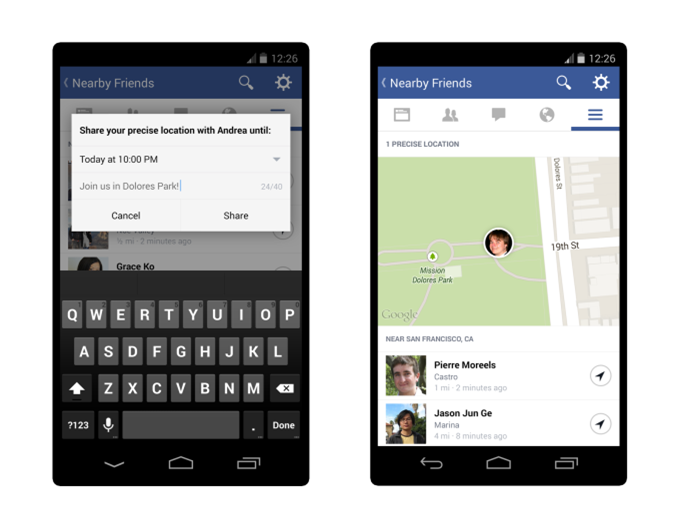

For an overview of how the Nearby Friends option lets you share your proximity or real-time exact location, and its privacy implications, read our feature article from launch.

The description below the Location History setting in Nearby Friends reads “When Location History is on, Facebook builds a history of your precise location, even when you’re not using the app. See or delete this information in the Activity Log on your profile.” Notice the careful use of ‘builds a history’ instead of the scarier word ‘tracks’.

Behind the Learn More link, Facebook explains that you can turn this tracking off but, “Location History must be turned on for some location feature to work on Facebook, including Nearby Friends.” It also notes that “Facebook may still receive your most recent precise location so that you can, for example, post content that’s tagged with your location or find nearby places.” So even if you turn it off, Facebook will still collect location data when necessary, as it did before Nearby Friends debuted.

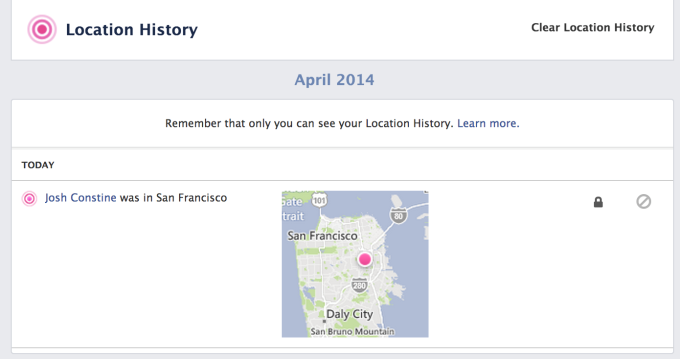

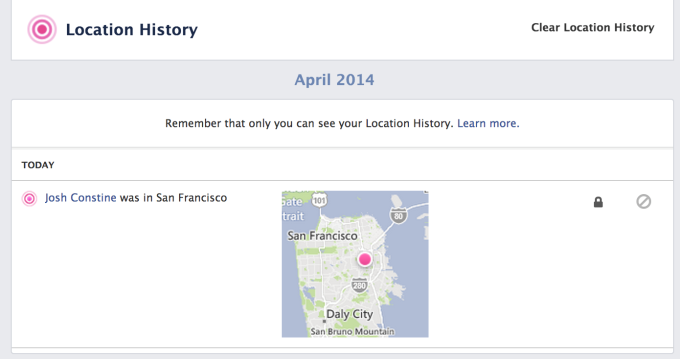

If you leave it on, you’ll see your coordinates periodically added to your Activity Log. However, you’ll only see your Location History if you scroll to the bottom of the filter options and look at this category of data specifically. It’s a bit sketchy that these maps don’t show up in the default view of Activity Log like most other actions. It’s almost like Facebook is trying to discourage use of the Clear Location History button.

There are plenty of ways Facebook could use this data to make your experience better. The company says “Location History helps us know when it makes the most sense to notify you (for example, by making sure we don’t send you a notification every time a Facebook friend who works with you is also in the office).”

By tracking where you are, Facebook could show you more relevant News Feed stories from friends or Pages nearby. For example, if Facebook sees I’m in the North Beach district of San Francisco instead of my home district of the Mission in the south of the city, it might increase the likelihood that I’d see status updates from friends who live up there, or an Italian restaurant in the area whose Page I Liked. It could also suggest Events happening nearby.

If I travel far from home, say to London, Facebook could show more posts from friends who live there or Pages based in the U.K. It could also know not to show me checkins or Events from home that would be irrelevant while I’m away.

Location Advertising

But there are also big opportunities for Facebook to use Location History to help itself make money. When I asked if it could power advertising, a Facebook spokesperson told me “at this time it’s not being used for advertising or marketing, but in the future it will be.”

It wouldn’t confirm exactly how, but I foresee it targeting you with ads for businesses that could actually be in sight or just a few hundred feet away. An ad for a brick-and-mortar clothing shop would surely be more relevant if shown when you’re on the same block. The ability to generate foot traffic that leads to sales could let Location History-powered Facebook ads generate big returns on investment for meatspace business advertisers. That means they’d be willing to pay more for these hyper-local ads than for ones pointed to users who are far away and much less likely to visit their store.

Facebook’s own VP Carolyn Everson discussed how she imagined Facebook ads might evolve in a call with Bloomberg in 2012, saying “Phones can be location-specific so you can start to imagine what the product evolution might look like over time, particularly for retailers”. And back in 2011, Facebook acquired a hyperlocal ad targeting startup called Rel8tion.

Luckily, Facebook says that putting Location History in the Activity Log “gives people a way to view and delete the underlying data.” That’s a relief, as it means you could use Nearby Friends with Location History turned on and then clear your history in the Activity Log to prevent that data from being used for marketing if you’re really concerned about that. The company explains that “When you hit delete we remove data from the user interface immediately and start working to permanently delete the data from the system.”

While there’s always a vocal minority angry about Facebook’s aggressive ad targeting, and the wider public is generally uneasy about it, we’ve seen that these feelings don’t necessarily change people’s behavior in the long term. That’s why Facebook is still thriving with big revenues. And over time I believe the world will get more comfortable with ad targeting. There are going to be ads on Facebook no matter what, and I personally would rather see relevant ones for local businesses than ads for random apps or websites.

Still, the realization that Location History will be used for ad targeting could scare some people away from using Nearby Friends.

This sentiment is dangerous for Facebook because Nearby Friends is off by default and users have to actively turn it on. The feature is also tucked away in the navigation menu of Facebook’s main iOS and Android apps. If users turn it off or refuse to turn it on in the first place, it may be too buried for them to voluntarily go in and activate it.

Facebook may have anticipated this, which could partly be why it plans to use News Feed teaser stories like “4 friends are nearby” to try to coax users into turning on Nearby Friends and Location History. With both social and marketing privacy concerns looming, though, Facebook will have to clearly demonstrate that Nearby Friends makes your life better by helping you gather with friends, or people won’t give up their data to use it.

Via: techcrunch